Stay in your human loop

How vibe-coding made me more intentional

I drafted up a Slack message, copied the text, pasted it into Claude, and was typing “how does this sound?” when I paused, thought “dude, this message is 3 sentences long...” and started typing up this poast.

Last week I did a lot of vibe coding and it made me feel powerful and omniscient. The powerful feeling comes from what Jasmine and Lucas describe as “parkour vision,” where every wall becomes a floor and the world becomes malleable. I think about all the ideas that I’ve always wanted to build, but never had time to sink my teeth into. These ideas tend to build upon each other. I think about how I can improve each component of the system so I can spend more time generating ideas. I generate more ideas. More ideas to improve the ideas. This is incredible!! Not only do I not have to know the exact code to build these ideas, I also don’t have to spend any of my time building them. Claude does it for me!!

Like Igor on Claude Nine, this power begins to permeate beyond the terminal. Each problem I have can either be automated or sped up with my assistant. I can refine my behavioral interview responses, get feedback on a blog post, and summarize 8 bajillion papers. All in different threads! I push Command+Tab, context switching as fast as my processor’s clock. Sometimes I forget I asked for recipe ideas two days ago, but usually I remember the important stuff.

And I’m not just generating, I’m validating. I easily get validation to quell any anxiety I experience. And that’s how I landed on screenshotting my three-sentence message and asking Claude to verify that I sounded like a normal human.

Sometimes, though, I stop to think and it doesn’t sit right with me. I imagine this is what it feels like going about your day sucking on a vape. I question my approach and the offloading I’m doing with AI.

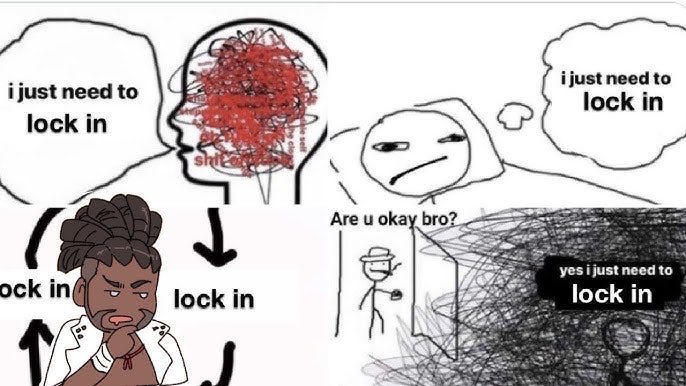

I am generally confused by the large variance in takes on AI use. I’ve read a lot of different takes on vibe coding, and there is a plethora of them about its benefits and its dangers. The discourse is a game of pong between X takes and Hacker News comments:

“Are we to believe the uplift studies?”

“Anthropic writes 100% of their code with Claude!”

“So does OpenAI!”

“What about that study from Anthropic on learning with AI?”

“But I swear, AI coding is the worst!”

Because of this whiplash, I have been dumping and shaping my thoughts in this post as an exercise for the author. Before the “bro, it’s not that deep” thought comes barreling down the tracks, I want to appeal to the argument that it is important to think critically about our AI use. By thinking critically, I’m forming my own opinion, which keeps me from being magnetized to memetic arguments. In short, I want to find my balance between being a luddite and a sensationalist.

For the uninitiated, I previously worked as the founding engineer at a startup and recently have been working on interpretability, evaluations, and my critical thinking abilities. Relative to my peers in SF, I would place myself as Somewhat Skeptical on the scale of AI Skeptic to AI Champion. I have felt both highs and lows while offloading engineering tasks to coding assistants. I have built web applications faster than I did 4 years ago, and I’ve also rolled with code I didn’t fully grasp. I’ve savvily caught generated bugs or hallucinations, and also covered edge cases with generated code.

As I have been learning ML and interpretability, I have been cautious about including assistants in my process. I’ve snoozed Cursor Tab when learning PyTorch, read and annotated papers by hand, and reimplemented basic techniques such as Monte Carlo tree search. Don’t worry, accelerationists! I have not gone completely O’Natural! To get some speedup, I’ve been using AI to critique my code after a manual first pass (typically in Cursor Ask Mode) and I’ve made prompts to generate paper summaries or code examples for me to practice.

I generally subscribe to the argument that AI is like a calculator and that it allows us to speed up tasks we are slower at. I’m reminded of my Linear Algebra class where our professor had us prove proficiency in matrix multiplication, and then all further exams were proof-based. Regardless of my calculator use, I think there is a lot of value in knowing how to multiply two numbers in my head quickly. I’m not doing matmuls in my head, but if the calculation were a black box to me, I would have a very hard time understanding the intuition behind linear transformations.

So far, the best method I’ve employed to decide whether to include AI in the process has been to branch depending on the type of goal. I mainly think of this in terms of process and outcome goal buckets. For example, if I am trying to learn so that I can do the task on my own, then I know I have to do it myself. The goal of learning is a process goal. A tactical suggestion for process goals that I have adopted from Andrej Karpathy (substack quote) is to not copy and paste from AI coding assistants and instead treat them as a more efficient search method, a better Stack Overflow. Neel Nanda has additional suggestions for using LLMs during learning.

Now, if the goal is outcome-based, like “build a todo-list app,” this is a clear task for Claude. The crux here is that I already know how to build a todo-list app and I do not think that I will see compounding returns from doing this without assistants. So I really only care about the outcome, and don’t think there are high marginal returns to struggling through the process. This clear bifurcation works when the goal is purely process or purely outcome. In that case, all you have to do is be intentional and clear about what you want to achieve.
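A toy Python sketch of that clean branch, with made-up names (`Goal`, `who_does_the_work`) that are purely illustrative and only cover the case where the goal is purely one bucket or the other:

```python
from enum import Enum


class Goal(Enum):
    """Hypothetical labels for the two goal buckets described above."""
    PROCESS = "process"  # the point is learning to do the task myself
    OUTCOME = "outcome"  # the point is the finished result


def who_does_the_work(goal: Goal) -> str:
    """Return who should do the task for a purely process or purely outcome goal."""
    if goal is Goal.PROCESS:
        # Process goal: do it by hand, using AI only as a better search.
        return "me"
    # Outcome goal: hand it off to the assistant.
    return "Claude"


print(who_does_the_work(Goal.PROCESS))
print(who_does_the_work(Goal.OUTCOME))
```

The function deliberately has no branch for mixed goals, so it only helps once the goal has been named.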

Unfortunately, I think that the majority of my tasks are driven by a mix of outcome and process goals. Take the aforementioned message as an example. I was asking someone for a favor, which could be an outcome goal: “receive comments on blog post.” Focused on the output, I took a screenshot and pasted it into Claude, typing “how does this message sound.” However, I paused for a second before sending the message and immediately a train of thought questioned this judgement. I realized I was offloading a task and seeking validation.

So, what am I going to do about it? Now, I’m prompt-engineering myself. I think of this as light guardrails or safe defaults that allow me to be intentional and press the gas pedal when needed. It’s like that little bit of friction before you delete something on your favorite SaaS app, getting you to pause and enter the human loop.

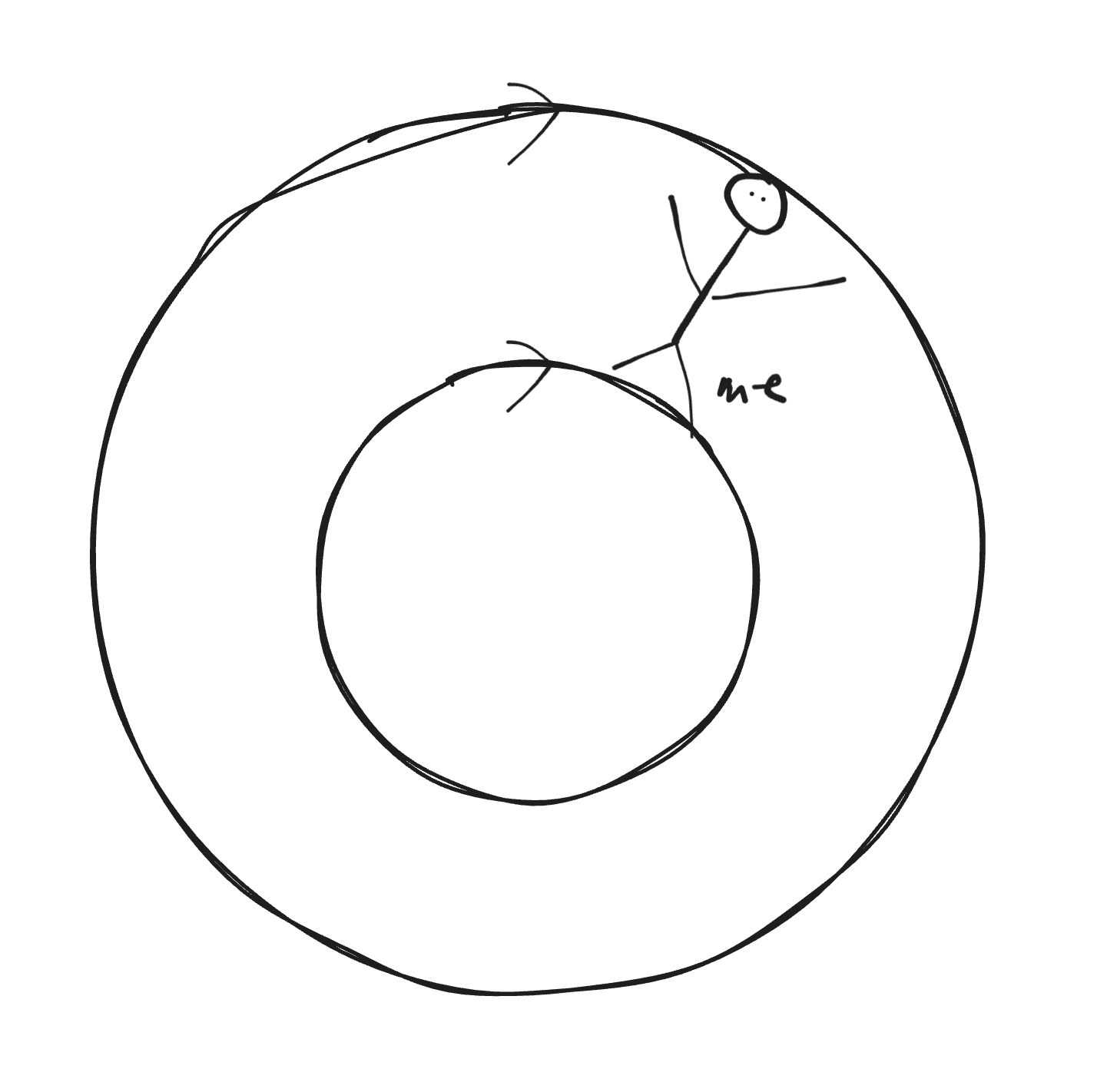

I’m thinking of my cognitive process as an inner and outer loop. The inner loop is focused on the task at hand: the day-to-day outcomes, the deliverables, and the deadlines. The outer loop is longer term, focused on personal growth, learning, and hopes and dreams. Also, improvements to the outer loop generally help improve the inner loop. The inner loop is the outcome loop, the outer loop is the process loop.

side note: I’m not sold on this analogy, but I think one cool thing that works is that these loops function like a drivetrain. If the loops are connected, the faster the outer loop makes a rotation, the faster the inner loop will move!

I feel the least intentional when this inner loop is whirring and the outer loop is stalled. There is this sense of myopic productivity, churning through my todo list, blasting out emails, or forcefully typing words into my machine. This is when my Pavlovian, hamster-wheel brain, downing coffee and moving todos around a kanban board, is happiest. “Ah,” it sighs, “look at all this value.”

This is when I feel closest to living life like an AI agent. My outer loop collapses and I am now just the inner loop. I’m on autopilot and I have become the agent.

With the right prompting, monitoring and interpretability, I separate the loops again, squeezing myself into this cozy space. Here, I hope to stay.

One last thing. What if you spend all your time in the outer loop? Yup, I’ve been there. Actually, I have that problem too. Maybe I should write about it. Until then, here is what I think spending all your time in the outer loop looks like.

feedback request: if you liked this or have thoughts, I am a DM away. I’m starting a practice of writing more publicly and would greatly appreciate any feedback.